Introduction

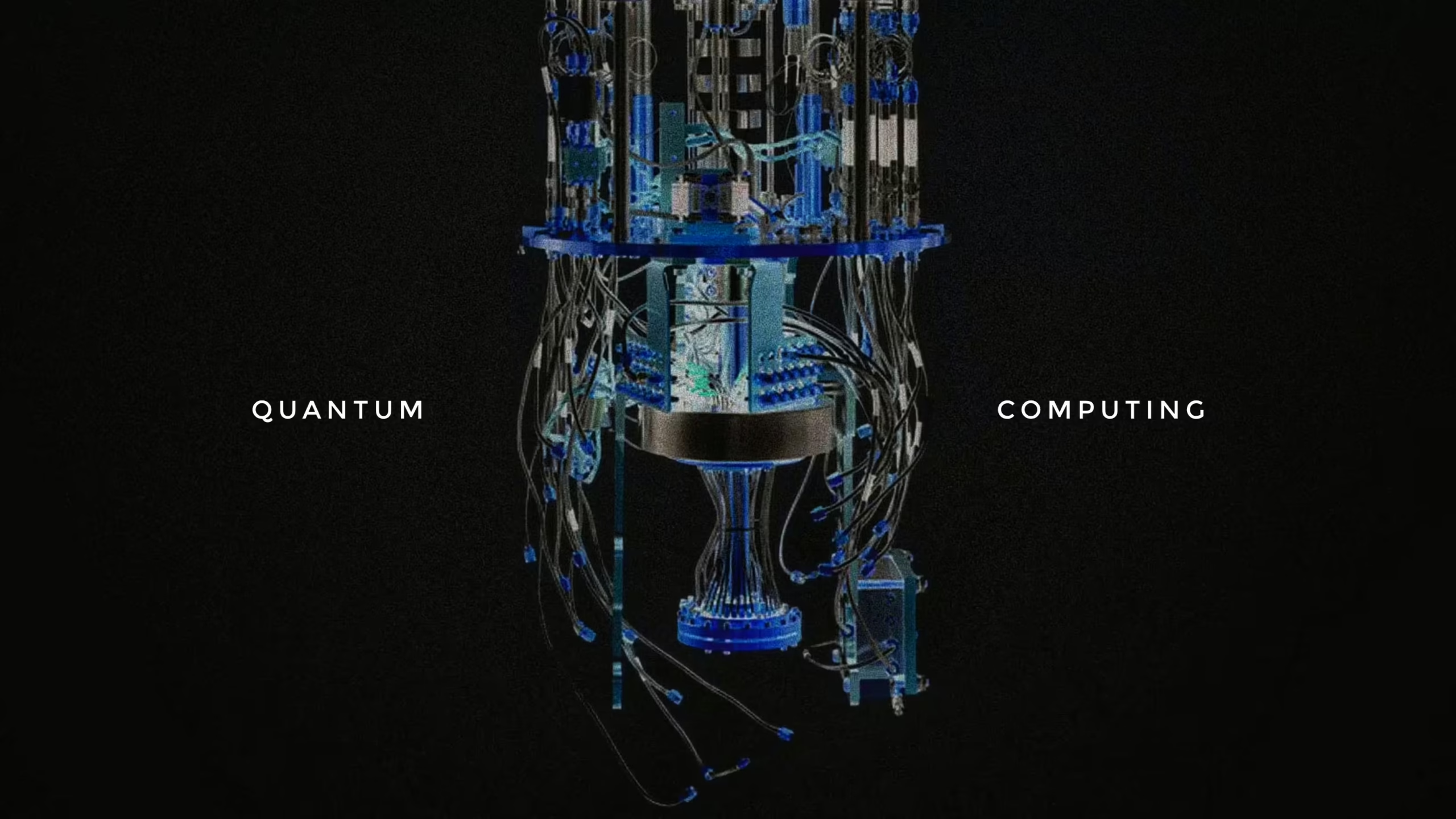

Classical computing has driven humanity’s progress for decades—from the invention of the microprocessor to the modern era of cloud computing and AI. Yet, as Moore’s Law slows and computational problems become more complex, quantum computing has emerged as a revolutionary paradigm.

Unlike classical computers, which process information using bits (0 or 1), quantum computers use qubits, which can exist in a superposition of 0 and 1 under the laws of quantum mechanics. For certain classes of problems, this lets quantum computers pursue solutions that are impractical for even the world’s fastest supercomputers.

In this blog, we’ll take a deep dive into the foundations, technologies, applications, challenges, and future of quantum computing.

What Is Quantum Computing?

Quantum computing is a field of computer science that leverages quantum mechanical phenomena—primarily superposition, entanglement, and quantum interference—to perform computations.

- Classical bit → Either 0 or 1.

- Quantum bit (qubit) → Can be 0, 1, or any quantum superposition of both.

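This means a register of n qubits is described by 2^n amplitudes at once, which is where the enormous potential computational power comes from. A measurement still returns only one outcome, so the advantage appears only for algorithms that steer those amplitudes toward the right answer.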
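
To make the scaling concrete, here is a minimal Python sketch using only the standard library. It represents one qubit as a pair of complex amplitudes and estimates how much memory a classical state-vector simulator would need for n qubits. The helper names (`probabilities`, `simulation_memory_bytes`) and the figure of 16 bytes per amplitude (two 64-bit floats) are assumptions made for illustration.

```python
import math

# A single qubit is described by two complex amplitudes (alpha, beta)
# that satisfy |alpha|^2 + |beta|^2 = 1.
alpha = 1 / math.sqrt(2)
beta = 1j / math.sqrt(2)
qubit = [alpha, beta]


def probabilities(state):
    """Born rule: the probability of each outcome is |amplitude|^2."""
    return [abs(a) ** 2 for a in state]


def amplitudes_needed(n_qubits):
    """Number of complex amplitudes in an n-qubit state vector."""
    return 2 ** n_qubits


def simulation_memory_bytes(n_qubits, bytes_per_amplitude=16):
    """Rough memory cost of storing the full state vector classically."""
    return amplitudes_needed(n_qubits) * bytes_per_amplitude


print("P(0), P(1):", probabilities(qubit))
for n in (10, 30, 50):
    gib = simulation_memory_bytes(n) / 2 ** 30
    print(f"{n} qubits -> {amplitudes_needed(n)} amplitudes, {gib} GiB")
```

Under these assumptions, 30 qubits already need 16 GiB and 50 qubits need about 16 PiB, which gives a feel for why simulating even modest quantum registers on classical hardware becomes impractical.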

The Science Behind Quantum Computing

1. Superposition

A qubit can exist in a combination of 0 and 1 at once. Imagine a coin: classical computing sees heads or tails, while a qubit is more like a spinning coin, described by weights (amplitudes) for heads and tails that resolve to one side only when measured.
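
The coin picture can be sketched in a few lines of Python. The Hadamard gate is the standard way to turn a definite 0 into an equal superposition. The list-based state vector and the `apply_gate` helper are illustrative choices, not how real hardware or any particular framework represents qubits.

```python
import math


def apply_gate(gate, state):
    """Multiply a 2x2 gate matrix by a 2-element state vector."""
    return [
        gate[0][0] * state[0] + gate[0][1] * state[1],
        gate[1][0] * state[0] + gate[1][1] * state[1],
    ]


s = 1 / math.sqrt(2)
HADAMARD = [[s, s], [s, -s]]
ZERO = [1.0, 0.0]  # the |0> state ("heads")

# Applying the Hadamard gate to |0> gives equal amplitudes for 0 and 1.
plus = apply_gate(HADAMARD, ZERO)
probs = [abs(a) ** 2 for a in plus]

print("amplitudes:", plus)
print("probabilities:", probs)
```

Both outcomes come out with probability one half (up to floating-point rounding), which is the "heads + tails" state described above.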

2. Entanglement

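Two qubits can become entangled, meaning their measurement outcomes are correlated regardless of the distance between them. Measuring one immediately tells you something about the other, although this cannot be used to send information faster than light. Entanglement is a key resource in many quantum algorithms.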
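
As a rough illustration, the sketch below builds the simplest entangled state (a Bell state) by applying a Hadamard gate to the first qubit and then a CNOT gate. The two-qubit state is stored as four amplitudes ordered 00, 01, 10, 11. The function names and the fixed random seed are assumptions for this example.

```python
import math
import random


def hadamard_on_first(state):
    """Apply a Hadamard gate to the first qubit of a 2-qubit state."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def cnot_first_controls_second(state):
    """Flip the second qubit whenever the first qubit is 1 (swap 10 and 11)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


start = [1.0, 0.0, 0.0, 0.0]  # both qubits in |0>
bell = cnot_first_controls_second(hadamard_on_first(start))
probs = [abs(a) ** 2 for a in bell]

# Simulate repeated measurements of both qubits.
rng = random.Random(0)
labels = ["00", "01", "10", "11"]
samples = rng.choices(labels, weights=probs, k=1000)

print("probabilities:", dict(zip(labels, probs)))
print("distinct outcomes seen:", sorted(set(samples)))
```

Only 00 and 11 ever appear: each qubit on its own looks random, but the two results always agree.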

3. Quantum Interference

Amplitudes in a quantum system can interfere like waves. A well-designed algorithm amplifies the computational paths that lead to correct answers and cancels out the ones that lead to incorrect answers.
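
A small example of cancellation, sketched in Python: applying the Hadamard gate twice returns a qubit to 0, because the two paths that lead to 1 carry opposite signs. The `apply_gate` helper and variable names are illustrative assumptions.

```python
import math


def apply_gate(gate, state):
    """Multiply a 2x2 gate matrix by a 2-element state vector."""
    return [
        gate[0][0] * state[0] + gate[0][1] * state[1],
        gate[1][0] * state[0] + gate[1][1] * state[1],
    ]


s = 1 / math.sqrt(2)
HADAMARD = [[s, s], [s, -s]]

after_one = apply_gate(HADAMARD, [1.0, 0.0])  # equal superposition
after_two = apply_gate(HADAMARD, after_one)   # paths recombine

# The amplitude for 1 is s*s - s*s: two paths with opposite signs cancel.
# The amplitude for 0 is s*s + s*s: two paths with the same sign reinforce.
print("after one Hadamard:", after_one)
print("after two Hadamards:", after_two)
```

If the qubit had simply been randomized to 0 or 1 after the first gate, the second gate could not restore it. The return to a definite 0 is the signature of interference.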

4. Quantum Measurement

When measured, a qubit collapses to 0 or 1. The art of quantum algorithm design lies in ensuring measurement yields the correct answer with high probability.
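
Measurement can be mimicked classically by sampling. The sketch below assumes a single qubit with amplitudes 0.6 and 0.8, so the probabilities are 0.36 and 0.64. The `measure` function, the seed, and the shot count are choices made for illustration.

```python
import random


def measure(state, rng):
    """Sample 0 or 1 with probability |amplitude|^2.

    Returns the outcome and the collapsed post-measurement state.
    """
    p0 = abs(state[0]) ** 2
    outcome = 0 if rng.random() < p0 else 1
    collapsed = [1.0, 0.0] if outcome == 0 else [0.0, 1.0]
    return outcome, collapsed


rng = random.Random(42)
state = [0.6, 0.8]  # P(0) = 0.36, P(1) = 0.64

# Each "shot" prepares the same state again and measures it once.
shots = [measure(state, rng)[0] for _ in range(10_000)]
frac_ones = sum(shots) / len(shots)
print("fraction of 1s:", frac_ones)

# Measuring an already-collapsed qubit repeats the same answer.
first, collapsed = measure(state, rng)
second, _ = measure(collapsed, rng)
print("first:", first, "second:", second)
```

A single shot reveals only one bit, which is why algorithms are designed so that the desired answer carries most of the probability before measurement.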

History and Evolution of Quantum Computing

- 1980s → Richard Feynman and David Deutsch proposed the idea of quantum computers.

- 1994 → Peter Shor developed Shor’s algorithm, showing that a sufficiently large quantum computer could factor integers efficiently and so break RSA encryption.

- 1996 → Lov Grover introduced Grover’s algorithm, which gives a quadratic speedup for unstructured database search.

- 2000s → Experimental prototypes built using superconducting circuits and trapped ions.

- 2019 → Google claimed “quantum supremacy” with its Sycamore processor, reporting that it completed a random-circuit sampling task that Google estimated was beyond classical supercomputers.

- 2020s → Quantum hardware advances (IBM, IonQ, Rigetti, Xanadu) + software frameworks (Qiskit, Cirq, PennyLane).

Types of Quantum Computing Technologies

There is no single way to build a quantum computer. Competing technologies include:

- Superconducting Qubits (Google, IBM, Rigetti)

  - Operate near absolute zero.

  - Scalable, but sensitive to noise.

- Trapped Ions (IonQ, Quantinuum, formerly Honeywell Quantum Solutions)

  - Qubits are represented by ions held in electromagnetic traps.

  - High fidelity, but slower gate speeds than superconducting qubits.

- Photonic Quantum Computing (Xanadu, PsiQuantum)

  - Uses photons as qubits.

  - Much of the hardware can run at room temperature, although single-photon detectors often require cooling; considered scalable.

- Topological Qubits (Microsoft’s approach)

  - Expected to be more stable against noise, but not yet demonstrated at scale.

- Neutral Atoms & Cold Atoms

  - Use laser-controlled atoms in optical traps.

  - Promising scalability.

Quantum Algorithms

Quantum algorithms exploit superposition, entanglement, and interference to achieve exponential or polynomial speedups on specific problems.

- Shor’s Algorithm → Factors large integers in polynomial time, which would break RSA and other widely used public-key encryption.

- Grover’s Algorithm → Speeds up unstructured search quadratically, from about N steps to about √N.

- Quantum Simulation → Models molecules and materials at the quantum level.

- Quantum Machine Learning (QML) → Aims to enhance optimization and pattern recognition; practical advantage is still an open research question.
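
Grover’s algorithm from the list above is small enough to simulate directly. The sketch below is a classical state-vector simulation, not code for quantum hardware: it tracks all N amplitudes explicitly, so it shows how the algorithm works rather than any speedup. The function name `grover_search` and the choice of 8 items with item 5 marked are assumptions for the example.

```python
import math


def grover_search(n_items, marked):
    """Simulate Grover's algorithm on a list-based state vector.

    Returns the final outcome probabilities and the iteration count.
    """
    # Start in the uniform superposition over all items.
    state = [1 / math.sqrt(n_items)] * n_items
    iterations = math.floor(math.pi / 4 * math.sqrt(n_items))

    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        state[marked] = -state[marked]
        # Diffusion: reflect every amplitude about the mean.
        mean = sum(state) / n_items
        state = [2 * mean - a for a in state]

    return [a * a for a in state], iterations


probs, iterations = grover_search(8, marked=5)
print("iterations:", iterations)
print("P(marked item):", probs[5])
```

With 8 items, two iterations push the probability of measuring the marked item above 94%, compared with 12.5% for a random guess. The sign flip followed by the reflection is the interference step: it drains amplitude from wrong answers and moves it to the right one.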

Applications of Quantum Computing

- Cryptography

  - Threatens widely used public-key encryption (RSA, ECC) once large fault-tolerant machines exist.

  - Enables quantum cryptography (quantum key distribution for secure communication).

- Drug Discovery & Chemistry

  - Simulates molecules for faster drug design.

  - Potentially revolutionary for pharma, biotech, and materials science.

- Optimization Problems

  - Logistics (airline scheduling, traffic flow).

  - Financial portfolio optimization.

- Artificial Intelligence & Machine Learning

  - Quantum-enhanced neural networks.

  - Potentially faster training for large models, though this is unproven.

- Climate Modeling & Energy

  - Simulating complex systems like weather patterns, battery materials, and nuclear fusion.
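
To make the cryptography item above more concrete, here is a hedged sketch of the classical half of Shor’s algorithm. Factoring reduces to finding the order r of a number a modulo N. A quantum computer would find r efficiently; the brute-force `find_order_classically` below is only a stand-in for that quantum step and takes exponential time on large inputs. The function names and the toy values N = 15, a = 7 are assumptions for illustration.

```python
import math


def find_order_classically(a, n):
    """Smallest r > 0 with a**r % n == 1 (stand-in for the quantum step).

    Assumes gcd(a, n) == 1, otherwise no such r exists.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_order(a, n):
    """Try to split n using the order of a; returns None if a is unlucky."""
    shared = math.gcd(a, n)
    if shared != 1:
        return shared, n // shared  # a already shares a factor with n
    r = find_order_classically(a, n)
    if r % 2 == 1:
        return None  # odd order: pick a different a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # trivial square root of 1: pick a different a
    p = math.gcd(half - 1, n)
    return p, n // p


print("order of 7 mod 15:", find_order_classically(7, 15))
print("factors of 15:", factor_from_order(7, 15))
```

Everything here except the order-finding loop is cheap on a classical machine. That single step is the one Shor’s algorithm replaces, which is why RSA key sizes that are safe today would not be safe against a large fault-tolerant quantum computer.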

Challenges in Quantum Computing

- Decoherence & Noise

  - Qubits are fragile and lose information quickly.

- Error Correction

  - Quantum error correction is expected to require hundreds to thousands of physical qubits for each logical qubit, depending on hardware error rates.

- Scalability

  - Building large-scale quantum computers (millions of qubits) remains unsolved.

- Cost & Infrastructure

  - Requires cryogenic cooling, advanced lasers, or photonics.

- Algorithm Development

  - Only a handful of useful quantum algorithms exist today.
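
The idea behind the error correction item can be illustrated with a classical analogy: store one logical bit as three physical copies and decode by majority vote. This is only a sketch. Real quantum codes must also handle phase errors and cannot simply copy or directly read the data qubits, so their overhead is far larger. The function names, the 5% error rate, and the seed are assumptions.

```python
import random


def simulated_logical_error_rate(p, trials, rng):
    """Estimate how often majority vote over 3 noisy copies fails."""
    failures = 0
    for _ in range(trials):
        # Each of the three copies flips independently with probability p.
        flips = sum(rng.random() < p for _ in range(3))
        if flips >= 2:  # majority vote decodes the wrong value
            failures += 1
    return failures / trials


def analytic_logical_error_rate(p):
    """Probability that at least 2 of 3 copies flip."""
    return 3 * p ** 2 * (1 - p) + p ** 3


p = 0.05
rng = random.Random(1)
simulated = simulated_logical_error_rate(p, 100_000, rng)
print("physical error rate:", p)
print("logical error rate (simulated):", simulated)
print("logical error rate (analytic): ", analytic_logical_error_rate(p))
```

Redundancy pushes the error rate from 5% to under 1%, but only because the physical error rate is already low. Adding more copies lowers the logical error further, which hints at why useful logical qubits need so many physical ones.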

Quantum Computing vs Classical Computing

| Aspect | Classical Computers | Quantum Computers |
|---|---|---|
| Unit of information | Bit (0 or 1) | Qubit (superposition of 0 and 1) |
| Computation | Sequential/parallel logic operations | Gates acting on amplitudes over 2^n basis states |
| Strengths | Reliable, scalable | Large speedups on specific problems |
| Weaknesses | Slow for some complex problems | Noisy, error-prone |
| Applications | General-purpose | Specialized (optimization, chemistry, cryptography) |

The Future of Quantum Computing

- Short-term (2025–2030)

  - “NISQ era” (Noisy Intermediate-Scale Quantum).

  - Hybrid algorithms combining classical + quantum (e.g., variational quantum eigensolver).

- Mid-term (2030–2040)

  - Breakthroughs in error correction and scaling.

  - Industry adoption in finance, logistics, healthcare.

- Long-term (Beyond 2040)

  - Fault-tolerant, general-purpose quantum computers.

  - Quantum Internet enabling ultra-secure global communication.

  - Possible role in Artificial General Intelligence (AGI).
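
The hybrid approach mentioned under the short-term outlook can be sketched with a toy variational quantum eigensolver. A classical optimizer tunes one rotation angle, and the "quantum" part (simulated here with plain arithmetic) reports the energy of the resulting state. The Hamiltonian H = Z + 0.5 X, the single-parameter ansatz, the learning rate, and the step count are all hypothetical choices for illustration.

```python
import math

# Hypothetical toy Hamiltonian H = Z + 0.5 * X as a 2x2 real matrix.
HAMILTONIAN = [[1.0, 0.5],
               [0.5, -1.0]]


def ansatz(theta):
    """Trial state Ry(theta)|0> = [cos(theta/2), sin(theta/2)]."""
    return [math.cos(theta / 2), math.sin(theta / 2)]


def energy(theta):
    """Expectation value <psi|H|psi> of the trial state.

    On hardware this would be estimated from repeated measurements.
    """
    psi = ansatz(theta)
    return sum(psi[i] * HAMILTONIAN[i][j] * psi[j]
               for i in range(2) for j in range(2))


def parameter_shift_gradient(theta):
    """Gradient of the energy from two shifted energy evaluations."""
    return (energy(theta + math.pi / 2) - energy(theta - math.pi / 2)) / 2


# Classical outer loop: plain gradient descent on the angle.
theta = 0.1
learning_rate = 0.4
for _ in range(200):
    theta -= learning_rate * parameter_shift_gradient(theta)

exact_ground_energy = -math.sqrt(1.25)  # lowest eigenvalue of H
print("VQE estimate:", energy(theta))
print("exact value: ", exact_ground_energy)
```

The division of labor is the point: the quantum device only prepares states and reports energies, while the optimization loop stays on a classical computer. That makes the approach a candidate for noisy near-term hardware.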

Final Thoughts

Quantum computing is not just a technological advancement—it’s a paradigm shift in computation. It challenges the very foundation of how we process information, promising breakthroughs in medicine, cryptography, climate science, and AI.

But we are still in the early stages. Today’s devices are noisy, limited, and experimental. Yet, the pace of research suggests that quantum computing could reshape industries within the next few decades, much like classical computing transformed the world in the 20th century.

The question is no longer “if” but “when”. And when it arrives, quantum computing will redefine what is computationally possible.