Introduction

The world of air combat is undergoing a fundamental transformation. For decades, air dominance has relied on large, expensive, manned fighter jets operating from established runways or carriers. But the 21st-century battlefield, defined by anti-access/area-denial (A2/AD) environments, electronic warfare, and rapidly evolving AI autonomy, demands a new kind of aircraft.

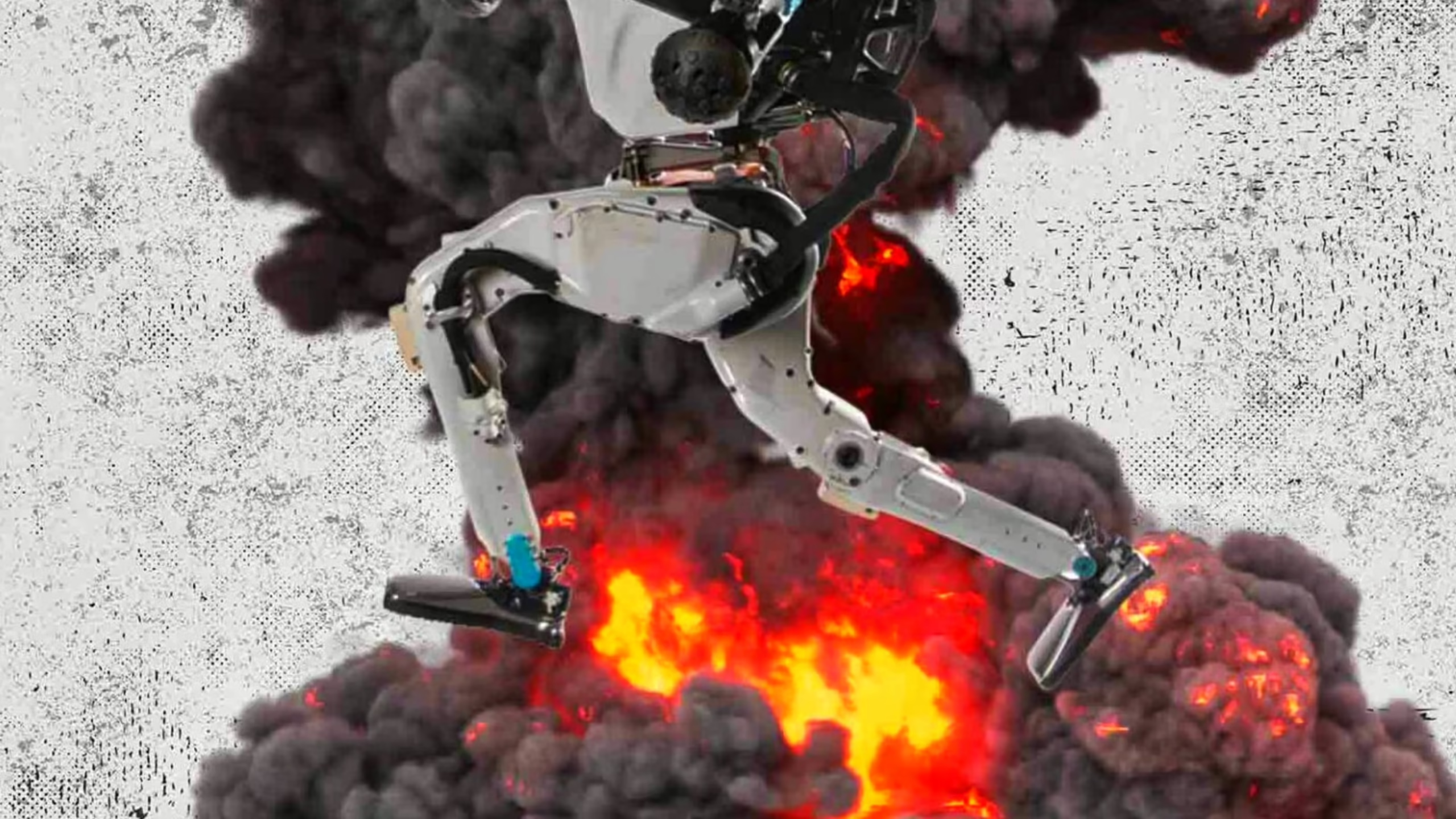

Enter X-BAT, the latest innovation from Shield AI, a leading U.S. defense technology company. Officially unveiled in October 2025, the X-BAT is described as “the world’s first AI-piloted VTOL fighter jet” — a multi-role, fully autonomous combat aircraft capable of vertical take-off and landing, operating from almost anywhere, and flying combat missions without human pilots or GPS support.

Powered by Shield AI’s proprietary Hivemind AI system, the X-BAT represents a bold rethinking of what airpower can look like: runway-free, intelligent, distributed, and energy-efficient. It aims to provide the performance of a fighter jet, the flexibility of a drone, and the autonomy of a thinking machine.

Company Background: Shield AI’s Vision

1. About Shield AI

- Founded: 2015

- Headquarters: San Diego, California

- Founders: Brandon Tseng (former U.S. Navy SEAL), Ryan Tseng, and Andrew Reiter

- Mission: “To protect service members and civilians with intelligent systems.”

Shield AI specializes in autonomous aerial systems and AI pilot software for military applications. The company is best known for its Hivemind autonomy stack, a software system capable of autonomous flight, navigation, and combat decision-making in GPS- and comms-denied environments.

Their product ecosystem includes:

- Nova – an indoor reconnaissance drone for special operations.

- V-BAT – a proven VTOL (Vertical Take-Off and Landing) UAV currently used by U.S. and allied forces.

- X-BAT – the next-generation AI-piloted VTOL combat aircraft, combining high performance and full autonomy.

The Birth of X-BAT: The Next Evolution

Unveiled in October 2025, the X-BAT was developed as the logical successor to the V-BAT program. While the V-BAT proved that vertical take-off UAVs could be reliable and versatile, the X-BAT takes that concept to fighter-jet scale.

According to Shield AI’s official release, the X-BAT was designed to:

- Operate autonomously in GPS-denied environments

- Deliver fighter-class performance (speed, range, altitude, and maneuverability)

- Launch from any platform or terrain — including ship decks, roads, or island bases

- Reduce cost and logistical dependence on traditional runways or aircraft carriers

- Multiply sortie generation — up to three X-BATs can be deployed in the space required for one legacy fighter
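The footprint claim in the last bullet is simple arithmetic, and a minimal sketch makes it concrete. The 3:1 packing ratio comes from the list above and is an upper bound ("up to three"). The function name and the four-spot deck are hypothetical, chosen only for illustration.

```python
def xbats_in_footprint(legacy_fighter_spots: int, xbats_per_spot: int = 3) -> int:
    """Upper bound on X-BATs that fit in the space of the given legacy fighter spots.

    The default ratio of 3 is the "up to three per legacy fighter" figure
    quoted in the article; real packing depends on the deck or hangar layout.
    """
    if legacy_fighter_spots < 0 or xbats_per_spot < 0:
        raise ValueError("counts must be non-negative")
    return legacy_fighter_spots * xbats_per_spot


# Hypothetical example: a deck with parking room for 4 legacy fighters.
print(xbats_in_footprint(4))  # 12
```

More airframes in the same space is what supports the sortie-generation claim, although actual sortie rates also depend on fuelling, arming, and maintenance turnaround, which this sketch does not model.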

This shift is not just technological — it’s strategic. The X-BAT directly addresses a growing military concern: maintaining air superiority in regions like the Indo-Pacific, where long-range infrastructure and fixed bases are vulnerable to attack.

X-BAT Design and Specifications

1. Airframe and Dimensions

While official technical data remains partly classified, available details indicate:

- Length: ~26 ft (approx. 8 m)

- Wingspan: ~39 ft (approx. 12 m)

- Ceiling: Over 50,000 ft

- Operational Range: Over 2,000 nautical miles (~3,700 km)

- Load Factor: +4 g maneuverability

- Storage/Transport Size: Compact enough to fit 3 X-BATs in one standard fighter footprint
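The figures above mix imperial and metric units, so a short sketch can cross-check the conversions and give a feel for what a 4 g load factor means. It uses the standard textbook relation for a steady, level, coordinated turn, r = v² / (g·√(n² − 1)). The 250 m/s airspeed is an assumption for illustration only, not a published X-BAT figure.

```python
import math

FT_TO_M = 0.3048    # exact: international foot
NMI_TO_KM = 1.852   # exact: international nautical mile
G0 = 9.80665        # standard gravity in m/s^2


def feet_to_metres(feet: float) -> float:
    return feet * FT_TO_M


def nmi_to_km(nmi: float) -> float:
    return nmi * NMI_TO_KM


def level_turn_radius_m(speed_m_s: float, load_factor: float) -> float:
    """Radius of a steady, level, coordinated turn at load factor n > 1."""
    if load_factor <= 1.0:
        raise ValueError("a level turn requires a load factor above 1")
    return speed_m_s ** 2 / (G0 * math.sqrt(load_factor ** 2 - 1.0))


print(f"Length:   {feet_to_metres(26):.1f} m")      # 7.9 m
print(f"Wingspan: {feet_to_metres(39):.1f} m")      # 11.9 m
print(f"Ceiling:  {feet_to_metres(50_000):.0f} m")  # 15240 m
print(f"Range:    {nmi_to_km(2_000):.0f} km")       # 3704 km

# Assumed airspeed of 250 m/s (hypothetical) at the quoted 4 g limit.
print(f"4 g turn radius: {level_turn_radius_m(250.0, 4.0):.0f} m")
```

Under that assumed airspeed the 4 g turn radius comes out at roughly 1.6 km. The radius grows with the square of speed, so the same load factor gives a much wider turn at higher speeds.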

The aircraft features blended-wing aerodynamics, optimized for lift efficiency during both vertical and forward flight. Its structure integrates lightweight composites and stealth-oriented shaping to minimize radar cross-section (RCS).

2. Propulsion and VTOL System

A major breakthrough of the X-BAT is its VTOL (Vertical Take-Off and Landing) system, allowing it to operate without a runway.

In November 2025, Shield AI announced a partnership with GE Aerospace to integrate the F110-GE-129 engine, a member of the engine family that powers F-16 and F-15 fighters. The engine is to be paired with GE’s Axisymmetric Vectoring Exhaust Nozzle (AVEN), a thrust-vectoring nozzle adapted here for vertical thrust and the transition to horizontal flight.

This propulsion setup allows:

- Vertical lift and hover like a helicopter

- Seamless transition to forward flight like a jet

- Supersonic dash potential in future variants

Such hybrid propulsion gives X-BAT unmatched operational flexibility — ideal for shipboard, expeditionary, or remote island operations.
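Vertical take-off on a single jet engine comes down to thrust-to-weight ratio: the engine must produce more thrust than the aircraft weighs. The sketch below estimates the heaviest lift-off mass for a given thrust. The 29,000 lbf figure is an assumption, roughly the afterburning thrust class commonly quoted for the F110-GE-129, and the 1.1 margin is a hypothetical design choice. Neither number comes from Shield AI.

```python
LBF_TO_N = 4.4482216152605  # newtons per pound-force
G0 = 9.80665                # standard gravity in m/s^2


def max_vertical_takeoff_mass_kg(thrust_lbf: float,
                                 thrust_to_weight: float = 1.1) -> float:
    """Largest mass that can lift off vertically at the required thrust-to-weight ratio.

    A ratio of exactly 1.0 only balances weight (hover), so a ratio above 1.0
    is required here to leave a margin for climb and control.
    """
    if thrust_to_weight <= 1.0:
        raise ValueError("vertical lift-off needs a thrust-to-weight ratio above 1")
    thrust_newtons = thrust_lbf * LBF_TO_N
    return thrust_newtons / (thrust_to_weight * G0)


# Assumed thrust (not from the article): about 29,000 lbf with afterburner.
mass_kg = max_vertical_takeoff_mass_kg(29_000)
print(f"Max vertical take-off mass: {mass_kg:,.0f} kg")
```

With these assumed inputs the limit is a little under 12,000 kg, covering airframe, fuel, and payload together. That trade-off is why vertical take-off and fighter-level range pull against each other.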

3. Autonomy: Hivemind AI System

At the heart of X-BAT lies Hivemind, Shield AI’s advanced autonomous flight and combat system.

Hivemind enables the aircraft to:

- Plan and execute missions autonomously

- Navigate complex terrains without GPS or comms

- Detect, identify, and prioritize threats using onboard sensors

- Cooperate with other AI or human-piloted aircraft (manned-unmanned teaming)

- Engage targets and make split-second decisions

Hivemind has already been flight-tested, though not yet in combat: it has autonomously flown an F-16 and Kratos drones, including dogfighting trials under DARPA’s ACE (Air Combat Evolution) program, which was run with the U.S. Air Force.

By integrating this proven autonomy stack into a fighter-class aircraft, Shield AI moves one step closer to a future where machines can think, decide, and fight alongside humans.

4. Payload, Sensors, and Combat Roles

X-BAT is designed to be multirole, supporting a range of missions:

| Role | Capabilities |
|---|---|
| Air Superiority | Internal bay for air-to-air missiles (AIM-120, AIM-9X), advanced radar suite |
| Strike / SEAD | Precision-guided munitions, anti-radar missiles, stand-off weapons |
| Electronic Warfare (EW) | Onboard jammer suite, radar suppression, decoy systems |
| ISR (Intelligence, Surveillance & Reconnaissance) | Electro-optical sensors, SAR radar, electronic intelligence collection |
| Maritime Strike | Anti-ship and anti-surface munitions |

All systems are modular and software-defined, meaning payload capabilities can be updated through software rather than hardware redesigns.

Strategic Advantages of X-BAT

1. Runway Independence

Runway vulnerability is one of the biggest weaknesses in modern air warfare. The X-BAT is designed to remove that constraint: it can launch from small ships, forward bases, or even rugged terrain, a key advantage in distributed operations.

2. Force Multiplication

Each manned fighter (F-35, F-16, etc.) could be accompanied by multiple X-BATs as AI wingmen, multiplying strike capability and expanding situational awareness.

3. Cost and Scalability

X-BAT is designed to be significantly cheaper to build and operate than traditional fighters. Lower cost means more units — enabling attritable airpower, where loss of individual aircraft does not cripple operations.

4. Survivability and Redundancy

Its small radar cross-section, distributed deployment, and autonomous operation make it harder to detect, target, or disable than conventional aircraft operating from known bases.

5. Human-Machine Teaming

The X-BAT’s autonomy allows it to fly independently or as part of a manned-unmanned team (MUM-T) — cooperating with piloted aircraft or drone swarms using AI coordination.

The Bigger Picture: The Future of Autonomous Air Combat

The X-BAT is part of a global paradigm shift — autonomous combat aviation. The U.S., UK, China, and India are all racing to develop unmanned combat air systems (UCAS).

Shield AI’s approach stands out for its combination of:

- Proven autonomy stack (Hivemind)

- VTOL capability eliminating runway dependence

- Scalability for distributed warfare

- Integration with existing infrastructure and platforms

These innovations could fundamentally change how future wars are fought — shifting air dominance from a few high-cost jets to swarms of intelligent, cooperative, semi-attritable systems.

Potential Military and Industrial Applications

| Sector | Application |
|---|---|
| Defense Forces | Expeditionary strike, reconnaissance, autonomous combat support |
| Naval Operations | Shipborne launch without catapult or arresting gear |
| Airborne Early Warning | AI-powered patrols and sensor relays |
| Disaster Response / Search & Rescue | Autonomous deployment in remote areas |
| Private Aerospace Sector | AI flight research, autonomy testing platforms |

Technical and Operational Challenges

Even with its impressive design, the X-BAT faces major hurdles:

- Energy and Propulsion Efficiency: Achieving both VTOL and fighter-level endurance requires sophisticated thrust-vectoring and lightweight materials.

- Reliability in Combat: Autonomous systems must perform flawlessly in chaotic, jammed, and adversarial environments.

- Ethical and Legal Frameworks: Fully autonomous lethal systems raise questions of accountability, command oversight, and global compliance.

- Integration into Existing Forces: Adapting current air force doctrines, logistics, and maintenance frameworks to support autonomous jets is a complex process.

- Software Security: AI systems must be hardened against hacking, spoofing, and data poisoning attacks.

X-BAT’s Place in the Global Defense Landscape

The X-BAT symbolizes a doctrinal shift in airpower:

- From centralized to distributed deployment

- From manned dominance to autonomous collaboration

- From expensive, limited fleets to scalable intelligent systems

1. Indo-Pacific and Indian Relevance

For nations like India, facing geographically dispersed challenges, the X-BAT’s runway-independent, mobile design could inspire similar indigenous systems.

India’s DRDO and HAL may explore comparable AI-enabled VTOL UCAVs, integrating them into naval and air force operations.

Roadmap and Future Outlook

| Phase | Timeline | Goal |
|---|---|---|
| Prototype Testing | 2026 | First VTOL flight and Hivemind integration |
| Combat Trials | 2027–2028 | Weapons integration and autonomous mission validation |
| Production Rollout | 2029–2030 | Large-scale deployment with US and allied forces |
| Export Partnerships | Post-2030 | Potential collaboration with allies (Australia, India, Japan, NATO) |

The Verdict: A New Age of Air Dominance

The X-BAT by Shield AI is not just another aircraft — it’s a statement about the future of warfighting.

By merging AI autonomy, VTOL capability, and combat-level performance, it challenges decades of assumptions about how and where airpower must be based.

If successful, X-BAT could mark the beginning of a new era:

Where air superiority is achieved not by the biggest, fastest manned jet — but by intelligent fleets of autonomous aircraft operating anywhere, anytime.

Final Thoughts

From the Wright brothers to the F-35, air combat has evolved through leaps of innovation. The X-BAT represents the next leap — one driven by artificial intelligence and physics-based engineering.

With Shield AI’s Hivemind giving it “digital instincts” and GE’s engine technology powering its lift and range, the X-BAT stands at the intersection of autonomy, agility, and adaptability.

As the world’s first AI-piloted VTOL fighter jet, it is more than a technological milestone — it’s a glimpse into the future of warfare, where autonomy, mobility, and intelligence redefine what it means to control the skies.
