Many people now share a quiet but unsettling feeling: new ideas are getting harder to find. Breakthroughs feel rarer. Progress feels slower. Innovation often looks like recombination rather than revolution.

And yet—at this exact moment—machines are beginning to generate ideas humans never explicitly taught them.

This raises a profound question: Have we reached peak human creativity, and is AI becoming the engine of what comes next?

The Feeling That Ideas Are Running Dry

Across science, technology, art, and business, innovation feels increasingly incremental. Products improve, but rarely astonish. Research papers grow more numerous but less transformative. Even cultural trends recycle faster than ever.

This is more than nostalgia; it may be a signal. Many domains may be approaching idea saturation, where the most obvious paths have already been explored.

The Myth of Endless Human Creativity

We often assume human creativity is infinite. History tells a more nuanced story. Periods of explosive innovation—the Renaissance, the Industrial Revolution, the digital age—were followed by long phases of refinement.

Creativity has never been a constant stream. It arrives in bursts, often when new tools expand what is possible.

Why Modern Problems Are Harder to Solve

Early innovation tackled comparatively well-defined problems: faster transport, cleaner water, basic communication. Today’s problems, such as climate change, aging, complex diseases, and global coordination, involve deeply interconnected systems.

These challenges don’t yield to intuition alone. They require navigating vast, multi-dimensional solution spaces that exceed human cognitive limits.

The Decline of Low-Hanging Fruit

In nearly every field, the “easy wins” are gone:

- The basic laws of physics are well established

- The obvious chemical compounds have been tested

- Simple engineering optimizations have been exhausted

What remains are hard ideas—ones buried deep in combinatorial complexity.

Economic Evidence of Slowing Innovation

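Economists have documented this slowdown. A widely cited example is “Are Ideas Getting Harder to Find?” (2020) by Bloom, Jones, Van Reenen, and Webb, which found research effort rising while research productivity falls. More broadly, economists have observed that: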

- R&D spending is increasing

- Breakthrough frequency is declining

- Productivity growth has slowed

In short, we are spending more to get less. This suggests the bottleneck may not be effort but idea generation itself.

Human Cognitive Limits and Idea Saturation

Human creativity is powerful but constrained by:

- Limited working memory

- Bias toward familiar patterns

- Fatigue and attention limits

- Cultural inertia

As idea spaces grow larger, humans struggle to explore them thoroughly.

The Combinatorial Explosion Problem

Modern innovation spaces grow exponentially as the number of variables increases. For example:

- Drug discovery involves billions of molecular combinations

- Materials science spans an enormous range of atomic configurations

- Design optimization involves countless parameter interactions

Human intuition simply cannot traverse these spaces efficiently.
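To put rough numbers on this, the short Python sketch below counts the size of two toy idea spaces. The parameter counts are illustrative, and so are the 1,000-item ingredient library and the throughput of one million evaluations per second. The helper names (`design_space_size`, `mixture_count`, `years_to_enumerate`) are hypothetical. The point is the growth rate, not the specific figures.

```python
import math

SECONDS_PER_YEAR = 365 * 24 * 3600


def design_space_size(num_parameters: int, values_per_parameter: int) -> int:
    """Configurations when every parameter is set independently of the others."""
    return values_per_parameter ** num_parameters


def mixture_count(library_size: int, components: int) -> int:
    """Unordered ways to choose `components` distinct items from a library."""
    return math.comb(library_size, components)


def years_to_enumerate(space_size: int, evaluations_per_second: float) -> float:
    """Time to check every candidate once at a fixed (hypothetical) throughput."""
    return space_size / evaluations_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    rate = 1_000_000  # hypothetical: one million evaluations per second
    for n in (10, 30, 60):
        size = design_space_size(n, 2)  # n on/off design parameters
        print(f"{n} on/off parameters: {size:,} designs, "
              f"{years_to_enumerate(size, rate):.3g} years to enumerate")
    print(f"5-ingredient mixtures from a 1,000-item library: {mixture_count(1000, 5):,}")
```

Each extra on/off parameter doubles the space. At 60 parameters the space holds about 1.15 × 10^18 designs. At the assumed rate, checking them one by one would take more than 36,000 years. Choosing just 5 ingredients from 1,000 already gives over 8 trillion possible mixtures.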

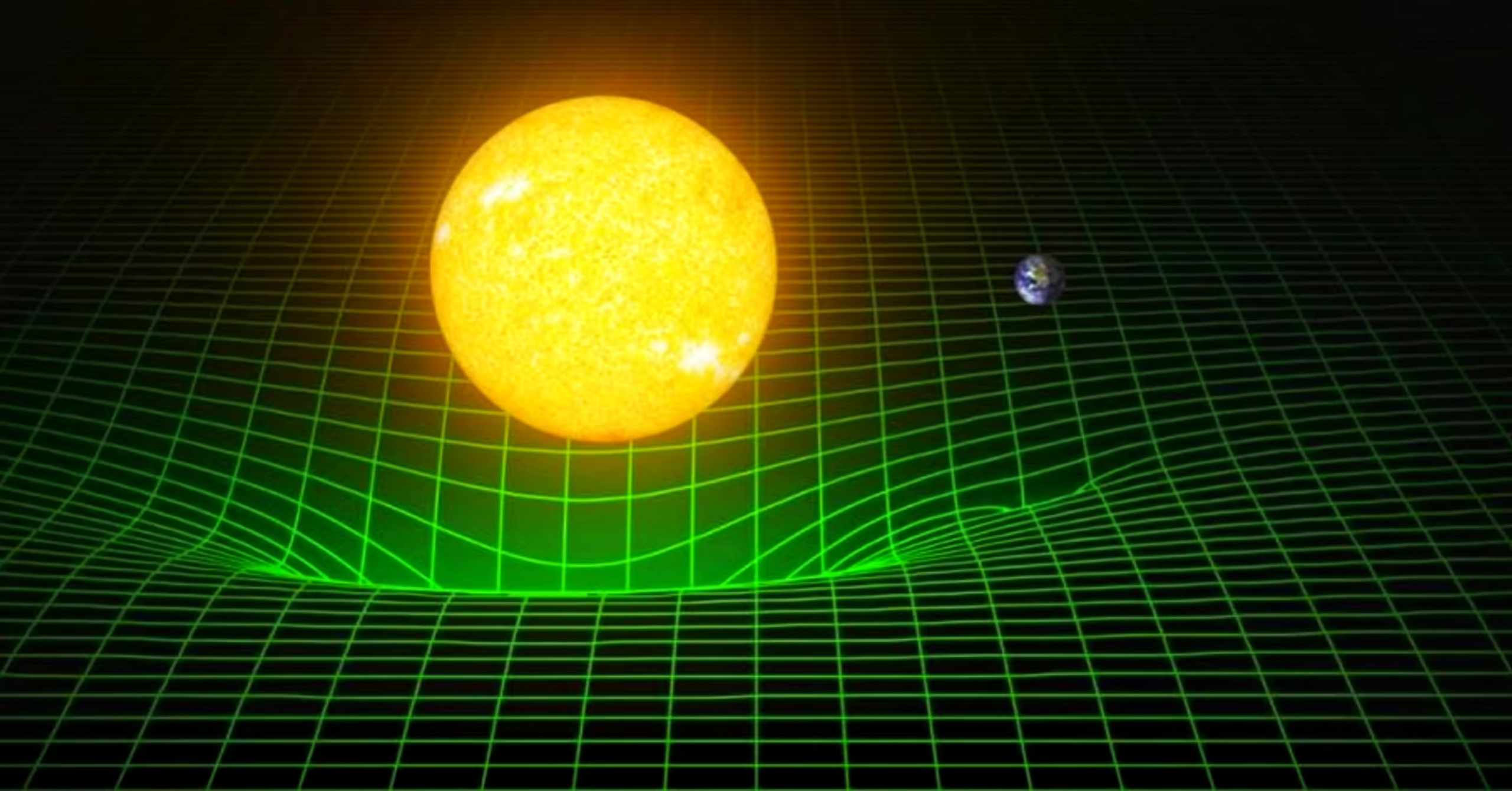

How AI Explores Ideas Differently

AI does not “think” like humans. It:

- Searches vast spaces systematically

- Tests millions of variations rapidly

- Lacks fatigue, ego, or attachment

- Detects patterns humans tend to overlook

Where humans leap, AI maps.

AI as a Creativity Amplifier, Not a Replacement

AI does not replace creativity—it amplifies it. Humans provide:

- Goals

- Values

- Context

- Meaning

AI provides:

- Scale

- Speed

- Breadth

- Exploration

Together, they form a new creative loop: humans set the direction, AI explores the space, and humans judge what it finds.

Examples of AI Discovering Novel Ideas

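AI systems have already:

- Predicted protein structures with accuracy rivaling experimental methods for many proteins (DeepMind’s AlphaFold)

- Found unconventional game strategies, such as AlphaGo’s famous move 37 against Lee Sedol in 2016

- Identified novel chemical compounds, including the antibiotic candidate halicin

- Designed unexpected circuit layouts

None of these results was programmed directly. The systems found them.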

AI in Science: Seeing What Humans Miss

In science, AI excels at:

- Detecting subtle correlations

- Simulating complex systems

- Proposing counterintuitive hypotheses

It doesn’t replace scientists—it expands what scientists can see.

AI in Art and Design

In creative fields, AI explores aesthetic spaces humans rarely enter:

- Hybrid styles

- Unusual compositions

- Novel textures and forms

Humans then curate, refine, and interpret—turning raw novelty into meaning.

The Human Role in an AI-Creative World

Humans remain essential for:

- Choosing what matters

- Judging quality

- Setting ethical boundaries

- Connecting ideas to lived experience

AI can generate possibilities. Humans decide which ones matter.

Risks of AI-Driven Creativity

There are real dangers:

- Homogenization through over-optimization

- Loss of cultural diversity

- Over-reliance on statistical novelty

- Ethical misuse

Creativity without judgment can become noise.

Creativity as Search, Not Inspiration

We often romanticize creativity as sudden inspiration. In practice, much of it is search under constraints.

AI excels at search. Humans excel at setting constraints.

This reframing helps explain why AI can be so effective at idea generation.
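The “search under constraints” framing can be sketched as a simple generate-and-test loop in Python. Everything in it is a hypothetical toy. A candidate idea is just a (length, width) pair. The two `CONSTRAINTS` and the `score` function stand in for the human side: what is acceptable and what counts as better. Plain random search stands in for the machine side. Real AI systems use far more sophisticated search methods, so this only shows how the two roles divide.

```python
import random
from typing import Callable, List, Optional, Tuple

# A candidate "idea" is a (length, width) pair in a toy design space (hypothetical).
Candidate = Tuple[float, float]


def propose(rng: random.Random) -> Candidate:
    """Machine side: propose a random design, each dimension in [0, 10)."""
    return rng.uniform(0.0, 10.0), rng.uniform(0.0, 10.0)


# Human side: constraints say which ideas are acceptable (hypothetical examples).
CONSTRAINTS: List[Callable[[Candidate], bool]] = [
    lambda c: 2 * (c[0] + c[1]) <= 30.0,  # material budget: perimeter at most 30
    lambda c: c[0] >= 2 * c[1],           # required shape: length at least twice width
]


def score(c: Candidate) -> float:
    """Human side: the objective says what 'better' means (here, larger area)."""
    return c[0] * c[1]


def search(num_trials: int, seed: int = 0) -> Optional[Candidate]:
    """Generate candidates, drop any that break a constraint, keep the best so far."""
    rng = random.Random(seed)
    best: Optional[Candidate] = None
    for _ in range(num_trials):
        candidate = propose(rng)
        if not all(ok(candidate) for ok in CONSTRAINTS):
            continue
        if best is None or score(candidate) > score(best):
            best = candidate
    return best


if __name__ == "__main__":
    best = search(num_trials=100_000)
    print("best design:", best, "area:", score(best) if best else None)
```

Under these toy constraints the best possible design is a 10 × 5 rectangle with an area of 50, so the result is easy to check by hand. Changing a constraint changes the answer without touching the search loop. That mirrors the division of labor described above.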

How AI Changes the Economics of Innovation

AI can dramatically lower the cost of experimentation:

- Simulations replace many physical trials

- Failures become cheap

- Iteration accelerates

This could shift innovation from a scarcity of experiments to an abundance of them.

Education and Creativity in the AI Age

Future creativity education will emphasize:

- Question formulation

- Taste and judgment

- Systems thinking

- Collaboration with machines

Learning what to ask may matter more than learning how to execute.

A New Renaissance or a Creative Plateau?

AI could lead to:

- A creative explosion

- Or shallow overproduction

The outcome depends on how intentionally we guide these tools.

Ethical and Philosophical Implications

As AI generates more ideas, new questions arise:

- Who owns them?

- Who gets credit?

- What defines originality?

Creativity may become less about authorship and more about curation.

The Future of Creativity: Human + Machine

The most powerful creative force may be neither AI alone nor humans alone, but the partnership between them.

Humans bring meaning. Machines bring scale.

Together, they may explore idea spaces humanity could never reach on its own.

Final Thoughts: Beyond Peak Creativity

We may indeed be reaching the limits of unaided human creativity. But that doesn’t mean ideas are running out—it means the method of finding them is changing.

AI is not the end of creativity. It may be the tool that helps us discover what comes after, not by replacing imagination but by expanding it.